Query Engine

Query engine is a quick query module which enables analysis of data in Amazon S3 using standard SQL. The User can generate a sample query from the existing Datasets.

The user can run SQL codes here to see or analyze the contents of datasets. User can also keep track of the history of query executions

Below is the image depicting the components in the Query Engine view

The user needs to select the Domain and the Dataset from the dropdowns, on which he/she wants to run the query on. User can generate a sample query by clicking on "Generate Sample Query" button next to Dataset Dropdown. After this a sample qury refering to the dataset will appear on the query editor.

Query Engine dropdowns will display only the user-accessible domains/datasets/views.

Below is the graphic demonstrating the query creation, view results, download results and historic list of executions.

Query Editor

Query editor is a space where User can type the SQL query he/she want to perform on the selected dataset. The query should be in SQL format.

User can click on clear button, to the botton right corner of the editor, to clear the editor.

Generate Sample Query

Incase of not knowing what to write in the query editor, the User can click on generate sample query, which generates a sample query appears in the editor giving idea on the structure of the query.

For a Multi tenancy deployment, Amorphic provides user capabilities to run cross tenant/database queries. You can query other tenant objects using fully qualified object names expressed with the three-part notation. The full path to any tenant object consists of three components: tenant name, schema, and name of the object. You can access any object from any other tenant/database using the full path notation, tenant_name.schema_name.object_name. To access a particular column, use tenant_name.schema_name.object_name.column_name.

If you encounter any issues regarding datatypes it might be due to usage of different datatypes other than the supported ones. Please check the AWS Athena supported datatypes.

Select Workgroup

This dropdown provides functionlity to users to select the workgroup on which he wants to make athena queries. Default value is 'primary' if user wants to query on governed datasets, he must select the 'AmazonAthenaLakeFormation' workgroup to avoid the permissions issue.

Governed datasets are queried by selecting AmazonAthenaLakeFormation workgroup

Other datasets can be queried by selecting default value primary workgroup

Query Results

Post the Query Run is successful, the user can either review the result on amorphic platform or can download the file to use on external platform. Both the options will apear on the console page once the query is executed.

Clicking the download option will download the query results in the csv format into Users local system.

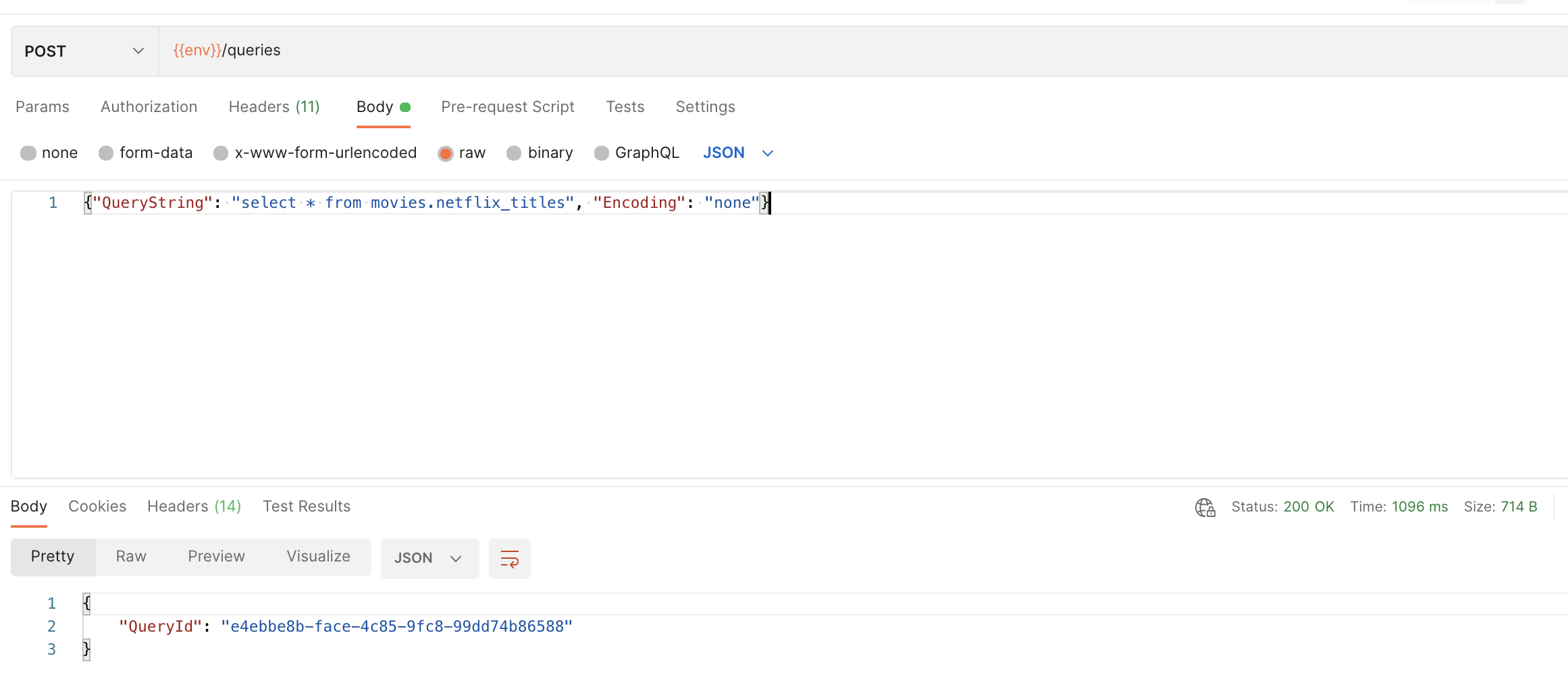

API Usage

Users can peform POST call on /queries to run queries against query engine. Following are the parameters required:

- QueryString

- Encoding

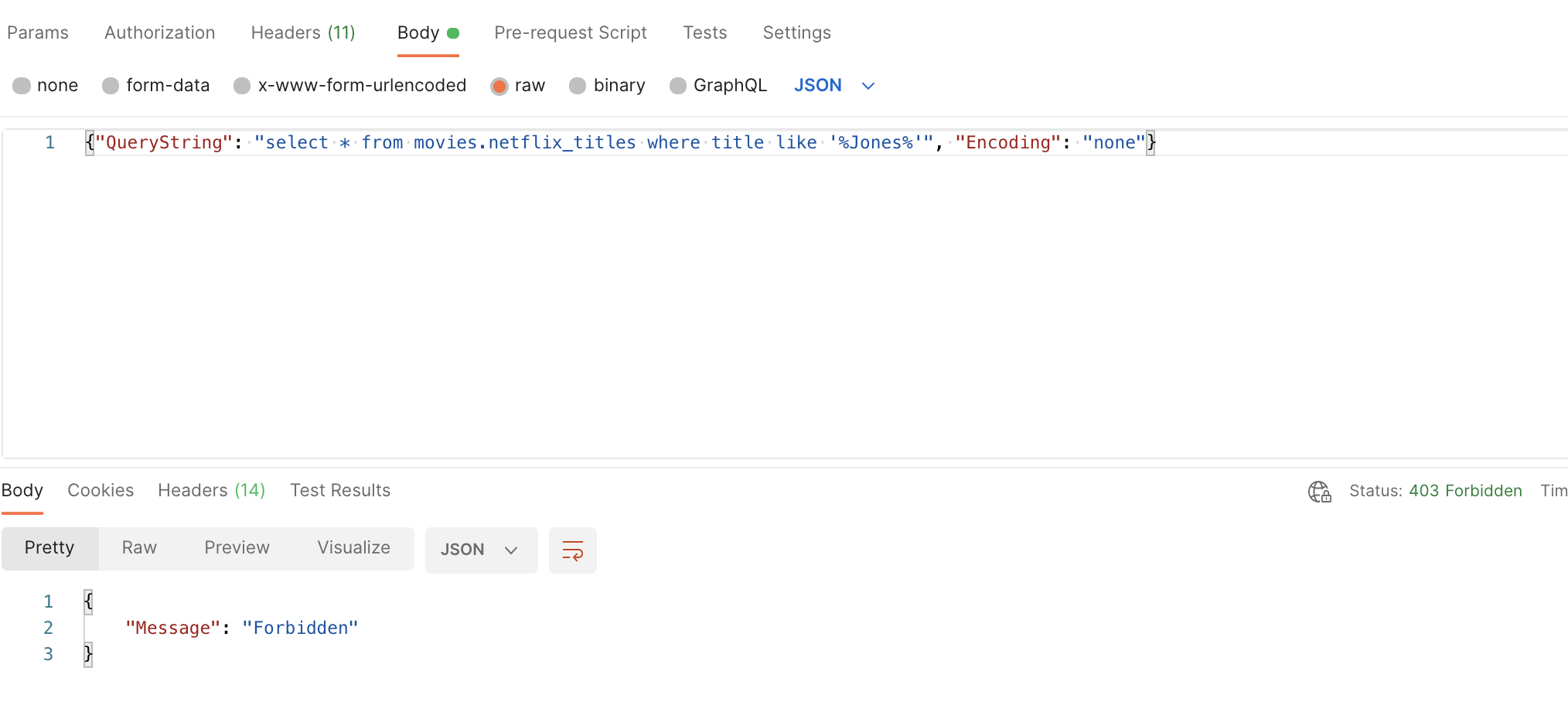

Valid values for encoding are "base64" and "none". Most of the queries can be run by using encoding as "none". However sometimes users may face forbidden issue because of WAF rules if querystrings contain strings like '%%'. To get around this issue, we suggest users to use encoding as "base64" and provide a "base64" encoded querystring.

Query Engine showing forbidden message:

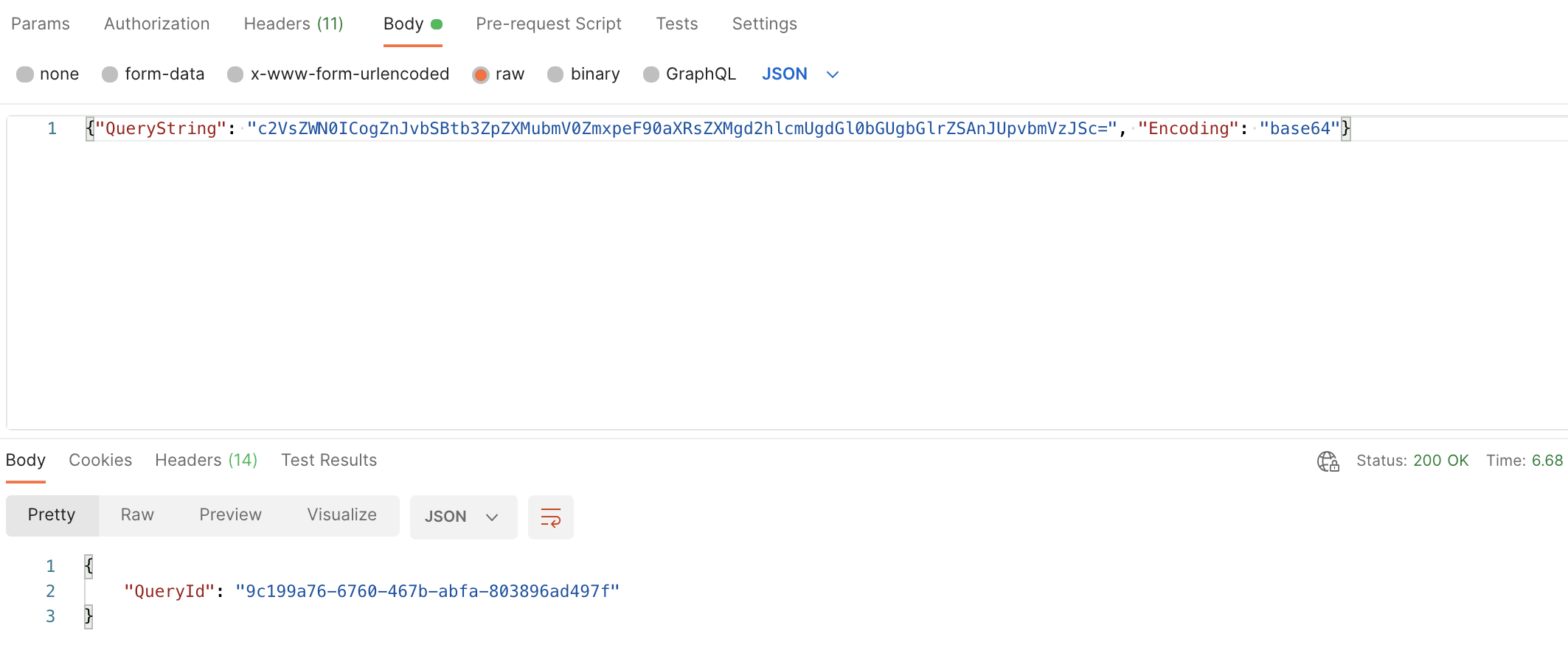

So we can encode QueryString to base64 format and use provide encoding value "base64" as shown below:

So we can encode QueryString to base64 format and use provide encoding value "base64" as shown below:

History

User will be able to see the listing of all the historic query executions in the History tab right next to query editor

Common Errors

Amorphic Query Engine uses AWS Athena service in the back end. Sometimes query might fail and user might face common errors. These errors most likely due to data-schema mismatch, invalid SQL commands etc.

For more details on errors: https://docs.aws.amazon.com/athena/latest/APIReference/CommonErrors.html

For more details on Hive data and metadata errors, please follow the link and search for the specific error: https://forums.aws.amazon.com/forum.jspa?forumID=242&start=0