Delta Lake Datasets

In Amorphic, users can create Delta Lake datasets with Lakeformation as the target location. Amorphic then creates a Delta Lake table in the backend to store the data.

What is Delta Lake?

Delta Lake is open source software that extends Parquet data files with a file-based transaction log for ACID transactions and scalable metadata handling. To learn more, check out the Delta Lake Documentation.
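
To make the idea of a file-based transaction log concrete, here is a minimal toy sketch in Python. It is not Delta Lake's implementation: it only mimics the general shape of the log (zero-padded JSON commit files under `_delta_log` that hold `add` and `remove` actions) and leaves out checkpoints, metadata, and protocol actions. The helper names `commit` and `snapshot` and the file names are hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


def commit(log_dir: Path, version: int, actions: list) -> None:
    """Write one commit as a zero-padded file with one JSON action per line."""
    path = log_dir / f"{version:020d}.json"
    # Mode "x" fails if this version already exists, which gives commits a serial order.
    with open(path, "x") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")


def snapshot(log_dir: Path, version: Optional[int] = None) -> set:
    """Replay commits up to `version` (inclusive) and return the live data files."""
    live = set()
    for path in sorted(log_dir.glob("*.json")):
        if version is not None and int(path.stem) > version:
            break
        for line in path.read_text().splitlines():
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


with tempfile.TemporaryDirectory() as tmp:
    log_dir = Path(tmp) / "_delta_log"
    log_dir.mkdir()
    commit(log_dir, 0, [{"add": {"path": "part-0000.parquet"}}])
    commit(log_dir, 1, [{"add": {"path": "part-0001.parquet"}}])
    # An overwrite removes the old files and adds the new one in a single commit.
    commit(log_dir, 2, [
        {"remove": {"path": "part-0000.parquet"}},
        {"remove": {"path": "part-0001.parquet"}},
        {"add": {"path": "part-0002.parquet"}},
    ])
    latest = snapshot(log_dir)
    as_of_v1 = snapshot(log_dir, version=1)

print(sorted(latest))
print(sorted(as_of_v1))
```

Because the table state is derived by replaying commits, reading "as of" an earlier version only means stopping the replay early. The Parquet files themselves are never edited in place.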

What does Amorphic support?

Amorphic Delta Lake datasets support the following features:

- Delta Lake provides ACID transaction guarantees between reads and writes. This means that:

  - For supported storage systems, multiple writers across multiple clusters can simultaneously modify a table partition and see a consistent snapshot view of the table, and these writes have a serial order.

  - Readers continue to see a consistent snapshot view of the table that the Apache Spark job started with, even when the table is modified during the job.

- Schema enforcement: Automatically handles schema variations to prevent insertion of bad records during ingestion.

- Time travel: Data versioning enables rollbacks and full historical audit trails.

- Upserts and deletes: Supports merge, update and delete operations to enable complex use cases like change-data-capture, slowly-changing-dimension (SCD) operations, streaming upserts, and so on.

- Supported file types: CSV, JSON, Parquet

Limitations (Both AWS and Amorphic)

- Supported data types

- Applicable only to the 'Lakeformation' target location

- Restricted/Non-Applicable Amorphic features for Delta Lake datasets:

  - Data Validation

  - Skip LZ (Validation) Process

  - Malware Detection

  - Data Profiling

  - Data Cleanup

  - Data Metrics collection

  - Life Cycle Policy

- Currently, Amorphic does not support schema or partition evolution for Delta Lake datasets.

- No time travel support through the Query Engine

- Write DML statements like UPDATE, INSERT, or DELETE are not supported through the Query Engine

For more limitations, check the Athena Delta Lake documentation.

How to Create Delta Lake Datasets?

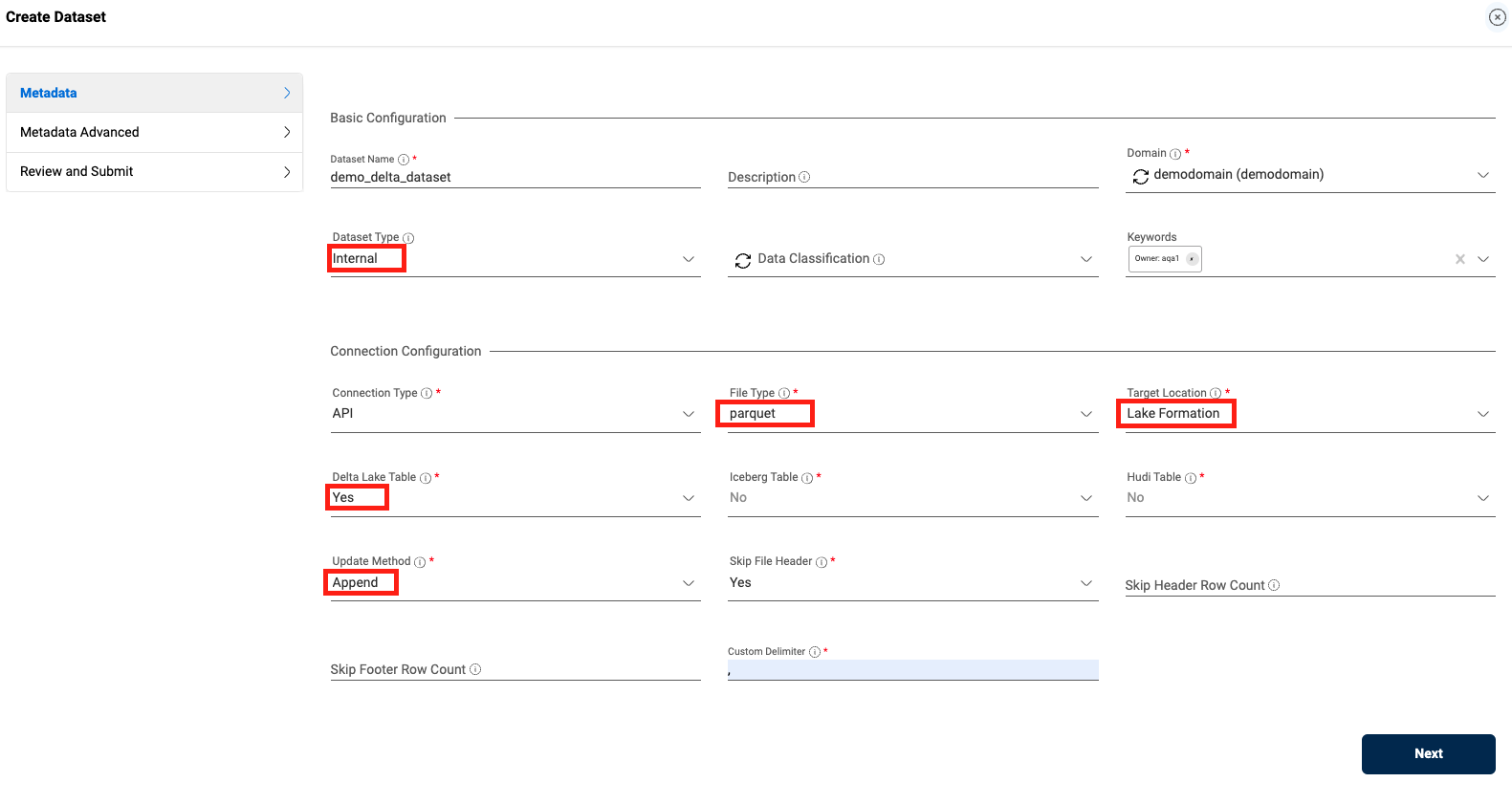

In Amorphic, you create Delta Lake datasets the same way as Lakeformation datasets: select Lakeformation as the target location, choose one of the supported file types (csv, json, parquet), and select Yes in the 'Delta Lake Table' dropdown. The update method can be either 'append' (adds the new data to the existing dataset) or 'overwrite' (replaces the existing data with the new data).

After the dataset metadata is registered successfully, you can specify Delta Lake related information with the following attribute:

- Partition Column Name: The column to partition the table by. It must be one of the column names in the schema.
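
The constraints above can be summarized as a small pre-flight check. The following Python sketch is illustrative only: the dictionary keys (`target_location`, `file_type`, `update_method`, `partition_column`, `schema`) are hypothetical names and not Amorphic's API payload, and treating the partition column as optional is an assumption.

```python
SUPPORTED_FILE_TYPES = {"csv", "json", "parquet"}
UPDATE_METHODS = {"append", "overwrite"}


def validate_delta_dataset(definition: dict) -> list:
    """Return a list of problems; an empty list means the definition passes the checks."""
    problems = []
    if definition.get("target_location") != "Lakeformation":
        problems.append("target location must be Lakeformation")
    if definition.get("file_type") not in SUPPORTED_FILE_TYPES:
        problems.append("file type must be csv, json, or parquet")
    if definition.get("update_method") not in UPDATE_METHODS:
        problems.append("update method must be append or overwrite")
    # Assumption: the partition column is optional; when given it must exist in the schema.
    partition = definition.get("partition_column")
    if partition is not None and partition not in definition.get("schema", []):
        problems.append(f"partition column {partition!r} is not in the schema")
    return problems


good = {
    "target_location": "Lakeformation",
    "file_type": "parquet",
    "update_method": "append",
    "schema": ["order_id", "order_date", "amount"],
    "partition_column": "order_date",
}
bad = dict(good, file_type="xlsx", partition_column="region")

print(validate_delta_dataset(good))
print(validate_delta_dataset(bad))
```

Returning every problem at once, instead of raising on the first one, lets a caller fix a definition in a single pass.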

Load Delta Lake Datasets

Uploading data to Delta Lake datasets works like Amorphic's "Data Reloads": uploaded files first go into a pending state. Select the files under the "Pending Files" option in the Files tab to start processing in the backend. Processing takes longer than for other types of datasets.

The following options are not available for Delta Lake datasets:

- 'Add Tags', 'Delete' and 'Permanent Delete' options when completed files are selected in 'Complete Files' File Status dropdown.

- 'Truncate Dataset', 'Download File', 'Apply ML' and 'View AI/ML Results' buttons/options for completed files in Files tab.

You can delete pending files from the 'File Status' dropdown in the Files tab.

Query Delta Lake Datasets

Once the data is loaded into a Delta Lake dataset, you can query and analyze it directly from the Amorphic Query Engine, subject to the Athena limitations mentioned above.

To access Delta Lake datasets from notebooks, run the following magic command in a Glue session enabled notebook:

    %%configure
    {
        "--enable-glue-datacatalog": "true",
        "--conf": "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension --conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog --conf spark.delta.logStore.class=org.apache.spark.sql.delta.storage.S3SingleDriverLogStore",
        "--datalake-formats": "delta"
    }
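
Once the session is configured, a dataset can be read through the Glue Data Catalog with Spark SQL. The sketch below is a hedged example and not an Amorphic API: the helper names are hypothetical, `my_domain` and `my_dataset` are placeholders, and it assumes the dataset is registered in the catalog as `<domain>.<dataset>` and that your Lake Formation permissions allow the read. `DESCRIBE HISTORY` is a Delta Lake SQL command; whether it is permitted in your environment is also an assumption.

```python
def qualified_name(database: str, table: str) -> str:
    """Backtick-quote a catalog table name for use in Spark SQL."""
    return f"`{database}`.`{table}`"


def preview_dataset(spark, database: str, table: str, limit: int = 10):
    """Return the first rows of a Delta Lake dataset registered in the catalog."""
    return spark.sql(f"SELECT * FROM {qualified_name(database, table)} LIMIT {limit}")


def table_history(spark, database: str, table: str):
    """Return the commit history recorded in the table's transaction log."""
    return spark.sql(f"DESCRIBE HISTORY {qualified_name(database, table)}")


# In a Glue session notebook, `spark` already exists after the %%configure cell runs:
# preview_dataset(spark, "my_domain", "my_dataset").show()
# table_history(spark, "my_domain", "my_dataset").show(truncate=False)
```

Passing `spark` in as a parameter keeps the helpers importable outside a notebook and easy to exercise with a stub session.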