Streams (Beta)

Streams let users stream data into Amorphic datasets, where it can be used for further ETL and analytics. As a Beta release, Amorphic lets users create Kinesis streams and push data to them.

Kinesis

A Kinesis data stream can be created using the '+' icon at the top right of the Streams page in the Ingestion section. The fields used to create a Kinesis stream in Amorphic are listed below. Stream creation is asynchronous: once the creation request succeeds, the stream is created in the background.

| Attribute | Description |
|---|---|
| Stream Name | Name to be used for the stream. |
| Stream Type | Only Kinesis is supported for now. |
| Stream Mode (Optional) | Capacity mode of the stream: On-Demand or Provisioned. Defaults to Provisioned. |
| Data Retention Period (Hrs) | Period of time, in hours, for which the data stream retains data records. |
| Shard Count | Number of shards to add to the stream. Each shard ingests up to 1 MiB/second and 1,000 records/second, and emits up to 2 MiB/second. |
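Because each shard has fixed ingest and emit limits (listed in the Shard Count row), a provisioned stream needs enough shards to cover whichever limit is hit first. The following is a minimal sizing sketch that uses only those per-shard figures. The function name is illustrative, not part of Amorphic. It assumes steady traffic, and it treats `read_mib_per_sec` as the total read throughput across all consumers of the stream.

```python
import math

# Per-shard limits for a provisioned Kinesis stream (from the Shard Count row).
WRITE_MIB_PER_SHARD = 1.0       # ingest: up to 1 MiB/second per shard
WRITE_RECORDS_PER_SHARD = 1000  # ingest: up to 1000 records/second per shard
READ_MIB_PER_SHARD = 2.0        # emit: up to 2 MiB/second per shard


def required_shards(write_mib_per_sec: float,
                    write_records_per_sec: float,
                    read_mib_per_sec: float = 0.0) -> int:
    """Return the smallest shard count that satisfies every per-shard limit."""
    needed = max(
        math.ceil(write_mib_per_sec / WRITE_MIB_PER_SHARD),
        math.ceil(write_records_per_sec / WRITE_RECORDS_PER_SHARD),
        math.ceil(read_mib_per_sec / READ_MIB_PER_SHARD),
    )
    # A stream always has at least one shard.
    return max(1, needed)


if __name__ == "__main__":
    # 2.5 MiB/s at 800 records/s is bounded by bandwidth: 3 shards.
    print(required_shards(2.5, 800))
    # 0.5 MiB/s at 4500 small records/s is bounded by record count: 5 shards.
    print(required_shards(0.5, 4500))
```

The second example shows why both ingest limits matter: many small records can require more shards than their total size alone would suggest.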

The image below shows how to create a stream.

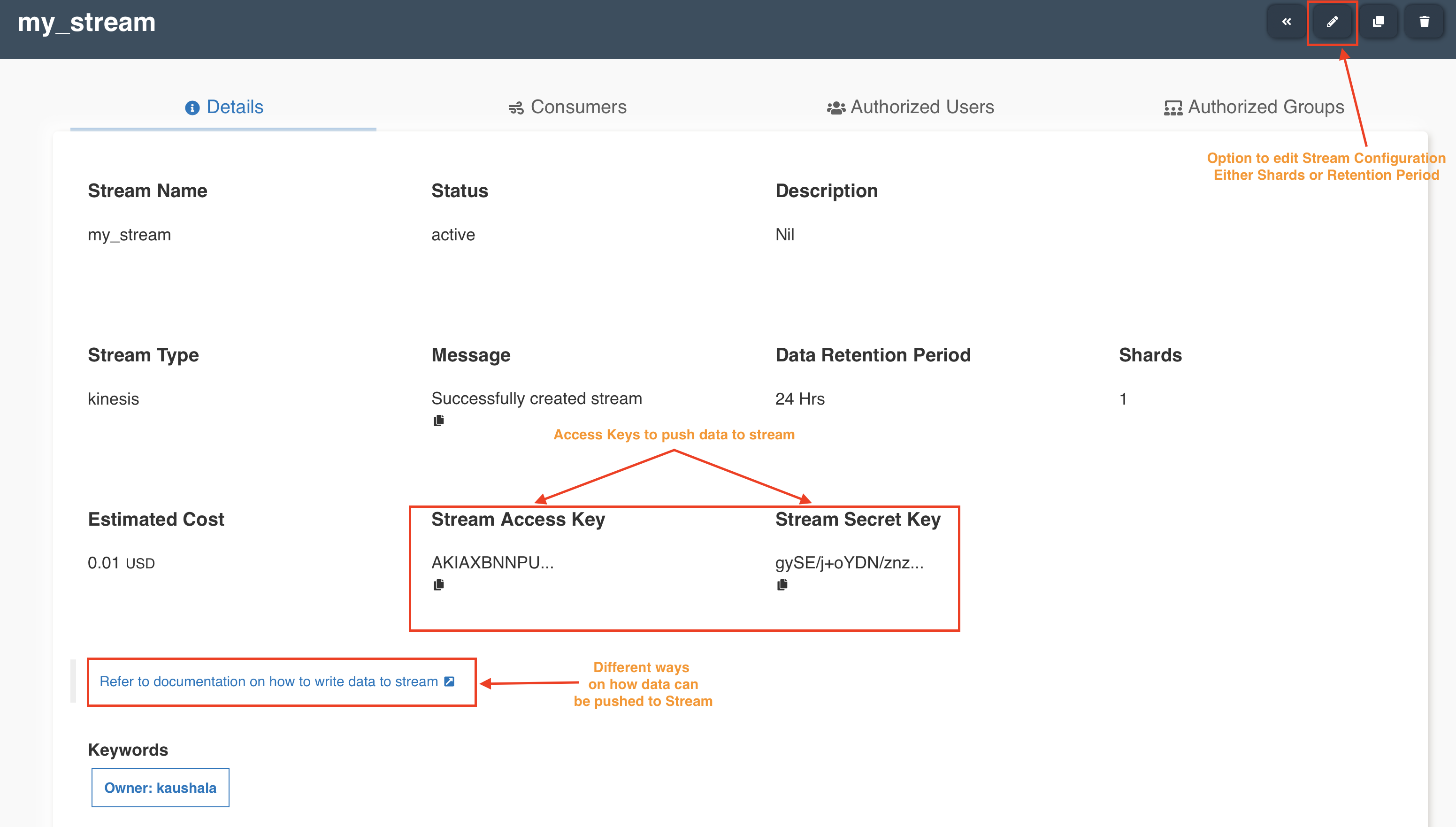

The following picture depicts the Stream details page in Amorphic:

The same Details page also displays the Estimated Cost of the stream, an approximation of the cost incurred since the stream was created. This feature currently has a few limitations:

- The cost cannot be estimated for streams that have pre-existing consumers, because consumers have no CreatedTime attribute.

- Cost estimation does not work for streams older than 3 years.

- Cost estimation for Kinesis streams depends on the number of shard hours provisioned. Amorphic uses LastModifiedTime to calculate the total number of hours for which shards have been provisioned. As a result, if a user updates the Description or any other attribute of a stream, the cost is calculated from the modification time rather than the creation time, and the estimated cost shown may be lower than the actual cost incurred.
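To see why using LastModifiedTime can understate the cost, compare shard-hours counted from creation with shard-hours counted from the last modification. The following is a minimal sketch, not Amorphic's implementation. It assumes a provisioned stream whose shard count did not change over the period. The price per shard-hour is a caller-supplied, hypothetical input, because actual pricing depends on region and capacity mode.

```python
from datetime import datetime, timezone


def shard_hours(shard_count: int, since: datetime, until: datetime) -> float:
    """Shard-hours accumulated by a fixed number of shards between two timestamps."""
    elapsed_hours = (until - since).total_seconds() / 3600
    return shard_count * max(0.0, elapsed_hours)


def estimated_cost(shard_count: int, since: datetime, until: datetime,
                   price_per_shard_hour: float) -> float:
    # price_per_shard_hour is a hypothetical input, not a published price.
    return shard_hours(shard_count, since, until) * price_per_shard_hour


if __name__ == "__main__":
    created = datetime(2024, 1, 1, tzinfo=timezone.utc)
    last_modified = datetime(2024, 1, 10, tzinfo=timezone.utc)  # e.g. Description edited
    now = datetime(2024, 1, 11, tzinfo=timezone.utc)

    # Shard-hours actually provisioned since creation: 2 shards x 240 h.
    print(shard_hours(2, created, now))        # 480.0
    # Shard-hours counted from LastModifiedTime: 2 shards x 24 h, hence the lower estimate.
    print(shard_hours(2, last_modified, now))  # 48.0
```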

After the stream is created successfully, Amorphic provides an AWS access key and secret key that can be used to push data to the stream. Refer to the AWS documentation for the different ways to push data into a stream.
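One common way to push data is the Kinesis `PutRecords` API through boto3, using the access key and secret key that Amorphic provides. The following is a minimal sketch under several assumptions:

- The region and the Kinesis stream name are placeholders; use the values for your deployment.
- The target dataset uses the CSV file type, so each record is sent as one newline-terminated CSV row. Header or delimiter requirements are not covered here.
- The helper names are illustrative.

```python
import csv
import io


def make_kinesis_client(access_key: str, secret_key: str, region: str):
    """Create a Kinesis client from the access key and secret key Amorphic provides."""
    import boto3  # imported here so the helpers below can be used without boto3

    return boto3.client(
        "kinesis",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        region_name=region,
    )


def to_csv_line(row) -> bytes:
    """Encode one row as a newline-terminated CSV line."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(row)
    return buf.getvalue().encode("utf-8")


def put_csv_rows(client, stream_name: str, rows, key_column: int) -> int:
    """Push rows to the stream with PutRecords; return how many records failed."""
    max_batch = 500  # PutRecords accepts at most 500 records per request
    failed = 0
    for start in range(0, len(rows), max_batch):
        entries = [
            {"Data": to_csv_line(row), "PartitionKey": str(row[key_column])}
            for row in rows[start:start + max_batch]
        ]
        response = client.put_records(StreamName=stream_name, Records=entries)
        # Failed entries carry an ErrorCode in response["Records"] and can be retried.
        failed += response["FailedRecordCount"]
    return failed


if __name__ == "__main__":
    # Placeholder values: substitute the keys, region, and stream name for your stream.
    kinesis = make_kinesis_client("<access-key>", "<secret-key>", "us-east-1")
    print(put_csv_rows(kinesis, "<kinesis-stream-name>", [[1, "hello"], [2, "world"]], key_column=0))
```

`PutRecords` can partially succeed. A non-zero return value means some rows should be resent, for example by collecting the entries whose response record has an `ErrorCode`.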

If required, stream details can be updated using the edit option on the details page. Only the stream metadata and stream configuration can be updated.

All datasets associated with a stream can be retrieved with the following API call:

`GET /streams/{id}?request_type=get_datasets`
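The call above can be made from any HTTP client. The following is a minimal standard-library sketch. The base URL, the `Authorization` header name, and the token format are hypothetical placeholders, since the API host and authentication scheme are not described here. The response is returned as parsed JSON without assuming its shape.

```python
import json
import urllib.parse
import urllib.request


def build_get_datasets_request(base_url: str, stream_id: str, token: str) -> urllib.request.Request:
    """Build GET /streams/{id}?request_type=get_datasets against a placeholder base URL."""
    path = "/streams/" + urllib.parse.quote(stream_id, safe="")
    query = urllib.parse.urlencode({"request_type": "get_datasets"})
    url = base_url.rstrip("/") + path + "?" + query
    # The Authorization header name and token format are assumptions.
    return urllib.request.Request(url, headers={"Authorization": token}, method="GET")


def get_stream_datasets(base_url: str, stream_id: str, token: str):
    """Send the request and return the decoded JSON body."""
    req = build_get_datasets_request(base_url, stream_id, token)
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_get_datasets_request("https://amorphic.example.com/api", "<stream-id>", "<token>")
    print(req.get_method(), req.full_url)
```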

Consumers

For consumers, Amorphic uses Kinesis Data Firehose delivery streams. These continuously collect streaming data and load it into the destinations you specify. Each consumer is attached to a dataset, which is the final destination of the data collected from the stream. The following fields are required to create a consumer in Amorphic.

| Attribute | Description |
|---|---|
| Consumer Name | Name to be used for the consumer. |
| Buffer Size | Amount of data (in MiB) the consumer buffers before delivering it to the target location. |
| Buffer Interval | Period of time (in seconds) the consumer buffers data before delivering it to the target location. |
| Target Location | Final destination in which the streaming data is stored. Currently supported target locations are Auroramysql or Redshift (depending on the database selected during deployment), Lakeformation, S3, and S3 Athena. |
| Create Dataset | Option to create a new dataset or use an existing dataset. |
| Dataset Configuration | Refer to Datasets (Create Dataset) for details on the fields. |
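Kinesis Data Firehose delivers a batch as soon as either the buffer size or the buffer interval is reached, whichever comes first. Together these two settings therefore bound how long data waits before it lands in the dataset. The following is a minimal sketch of that rule. It assumes a steady incoming rate and ignores compression and format conversion. The function name is illustrative.

```python
def seconds_until_delivery(incoming_mib_per_sec: float,
                           buffer_size_mib: float,
                           buffer_interval_sec: float) -> float:
    """Time for one buffer to be delivered: fill time, capped by the buffer interval."""
    if incoming_mib_per_sec <= 0:
        # No data arriving: only the interval can trigger a delivery.
        return float(buffer_interval_sec)
    time_to_fill = buffer_size_mib / incoming_mib_per_sec
    return min(float(buffer_interval_sec), time_to_fill)


if __name__ == "__main__":
    # Busy stream: a 5 MiB buffer fills in 10 s, well before a 300 s interval.
    print(seconds_until_delivery(0.5, 5, 300))
    # Quiet stream: the 300 s interval triggers delivery first.
    print(seconds_until_delivery(0.001, 5, 300))
```

For low-volume streams, the buffer interval dominates latency. For high-volume streams, the buffer size determines how large each delivered file is.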

The dataset file types supported for each consumer target location are:

- Auroramysql: CSV

- Redshift: CSV, PARQUET

- Lakeformation: CSV, PARQUET

- S3 Athena: CSV, PARQUET

- S3: CSV, OTHERS
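A script that creates consumers programmatically could check the target location and dataset file type combination before submitting a request. The following is a minimal sketch. The dictionary simply mirrors the list above, and `is_supported` is a hypothetical helper, not part of Amorphic.

```python
# Supported dataset file types per consumer target location (mirrors the list above).
SUPPORTED_FILE_TYPES = {
    "Auroramysql": {"CSV"},
    "Redshift": {"CSV", "PARQUET"},
    "Lakeformation": {"CSV", "PARQUET"},
    "S3 Athena": {"CSV", "PARQUET"},
    "S3": {"CSV", "OTHERS"},
}


def is_supported(target_location: str, file_type: str) -> bool:
    """Return True if the file type (case-insensitive) is allowed for the target location."""
    return file_type.upper() in SUPPORTED_FILE_TYPES.get(target_location, set())


if __name__ == "__main__":
    print(is_supported("Redshift", "parquet"))     # True
    print(is_supported("Auroramysql", "PARQUET"))  # False
```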

The image below shows how to create a consumer with a new dataset.

The image below shows how to create a consumer with an existing dataset.

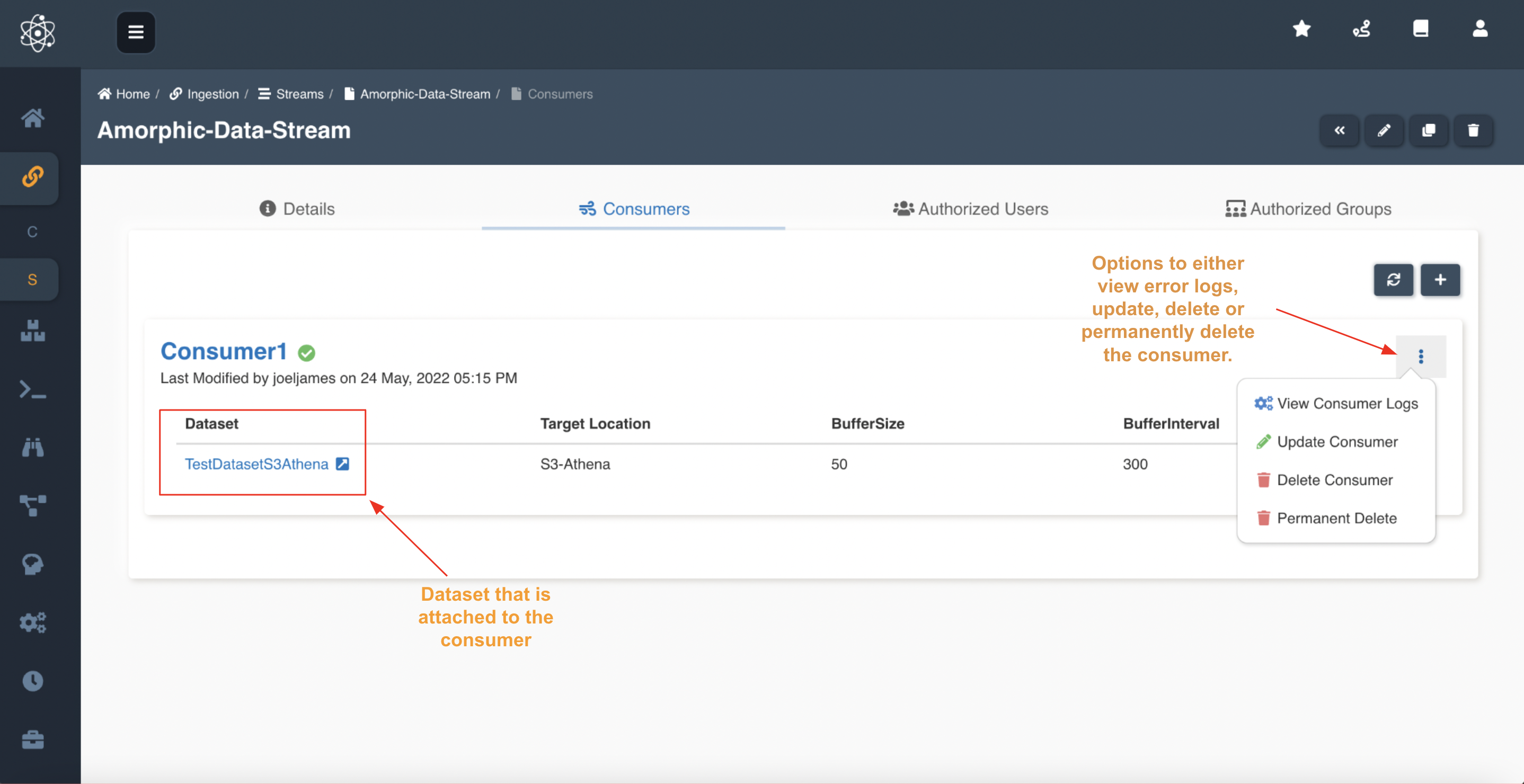

The following picture depicts the Consumer details page in Amorphic:

Consumer configuration (Buffer Size and Buffer Interval) can be updated from the details page if required. To update the metadata of the dataset, navigate to the dataset details page and edit it there.

- Starting with the 1.14 release, the dataset association of consumers for which Create New Dataset was set to No at consumer creation can also be updated. This feature is only supported through the API.